Software Freedom Day talk @HSBXL

Slightly over a month ago, September 20, Hacker Space Brussels (HSBXL), our local hacker space, got packed for Software Freedom Day: a celebration of what FOSS has achieved, why it’s worth pursuing further, and an opportunity to enjoy community. Talks were plentiful, covering a variety of topics from digital sovereignty to federated immersive learning and open data. And robotics - thanks Jurgen Gaeremyn for the invite and Wouter Simons for the smooth organization; it was a pleasure to speak at HSBXL.

Today - a write-up version of this talk. If you’re visiting here, I assume you already have some robotics experience, therefore I left out the more introductory aspects here. In case you prefer the presentation, the recording is available here.

After the introductory section How’s robotics evolving, we’ll structure the talk in four parts. First up, the Classic Stack - mostly heuristic based, and, from a scientific point of view, getting outdated. On the other hand, from an industrialization point of view, we’re reaching a point of maturity here. Most of these work rather well and are stable, being polished over the years. After that, Modern Stack explores what robotics is coming to be. Two large changes here - first, the use of AI, and second, far more integration (not splitting things up in dozens of functional blocks anymore).

Whichever of the two you prefer, the two next blocks are common for the two. In Foundations, we go to a lower abstraction level to look at what’s being used under the hood. Finally, Development Tooling covers visualization and simulation. Concerning the software projects listed today - I really had to make a selection there, and I’m sorry that I had to leave out several really nice projects.

How’s robotics evolving?

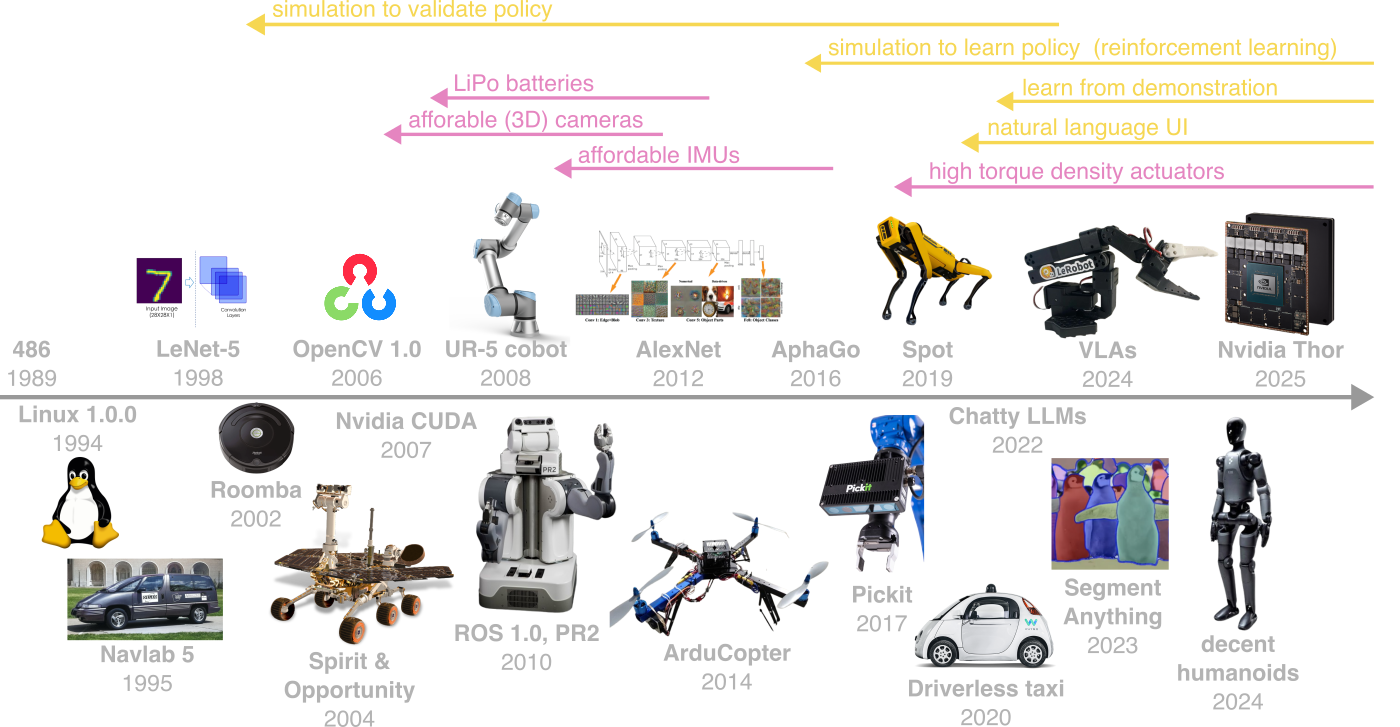

Before we go into projects, it’s perhaps good to get some idea of where robotics is coming from and what direction it’s going in. We’ll cover what happened over the past thirty years by means of the timeline below, which contains over a dozen influential, era-defining projects - some by now in a museum, like the Navlab 5 autonomous car, others, such as the Linux kernel, living stronger than ever.

In this timeline, there are two evolutions I’d like to touch on, and in particular, discuss how they’re influencing robotics. The first concerns hardware. The late 2000s brought affordable batteries, IMUs and brushless speed controllers. Not long after, we started seeing quadcopters. A more recent improvement lies in high torque density actuators, which got translated into Spot, and since last year, humanoids that mechatronically - finally - are in the neighborhood of C-3PO. Finally, though less well known, powerful, small compute has become available only recently. This year Nvidia made the Thor platform public, bringing desktop specifications (128 GB RAM, 2070 TFLOP FP4 GPU) into a package only slightly larger than a Raspberry Pi. In the coming years, we’ll hopefully see the applications of these in the form of truly mobile robots.

The second evolution concerns software - especially AI. Neural networks have been around for a while - for example, LeNet-5 went into production in 1998 - however, most robotics software still heavily relies on human-generated heuristics. Last year we got quite a bit closer with vision language action (VLA) models. Simulation is also playing a big role here. Simulation has been around forever. Initially, as a tool to validate policy; now as a tool to learn policy. A key development here was AlphaGo - which showed that superhuman performance on hard tasks is, well, possible. Since then, successful reinforcement learning applications trained (at least partially) in simulation are becoming more common. Related to simulation is learning from demonstration, where the robot infers its task from an example, much like humans do.

Less so an evolution and more an observation - robotics evolves slowly. For example, Navlab 5 realized the first trans-America drive; however, it took until 2020 before the first driverless taxis got deployed. Similarly, the PR2 robot achieved the ability to fold T-shirts and towels; now, a decade later with VLAs being the hot topic, cloth folding demos are still considered state of the art. Robotics is hard.

Classic Stack

What’s a Classic Stack?

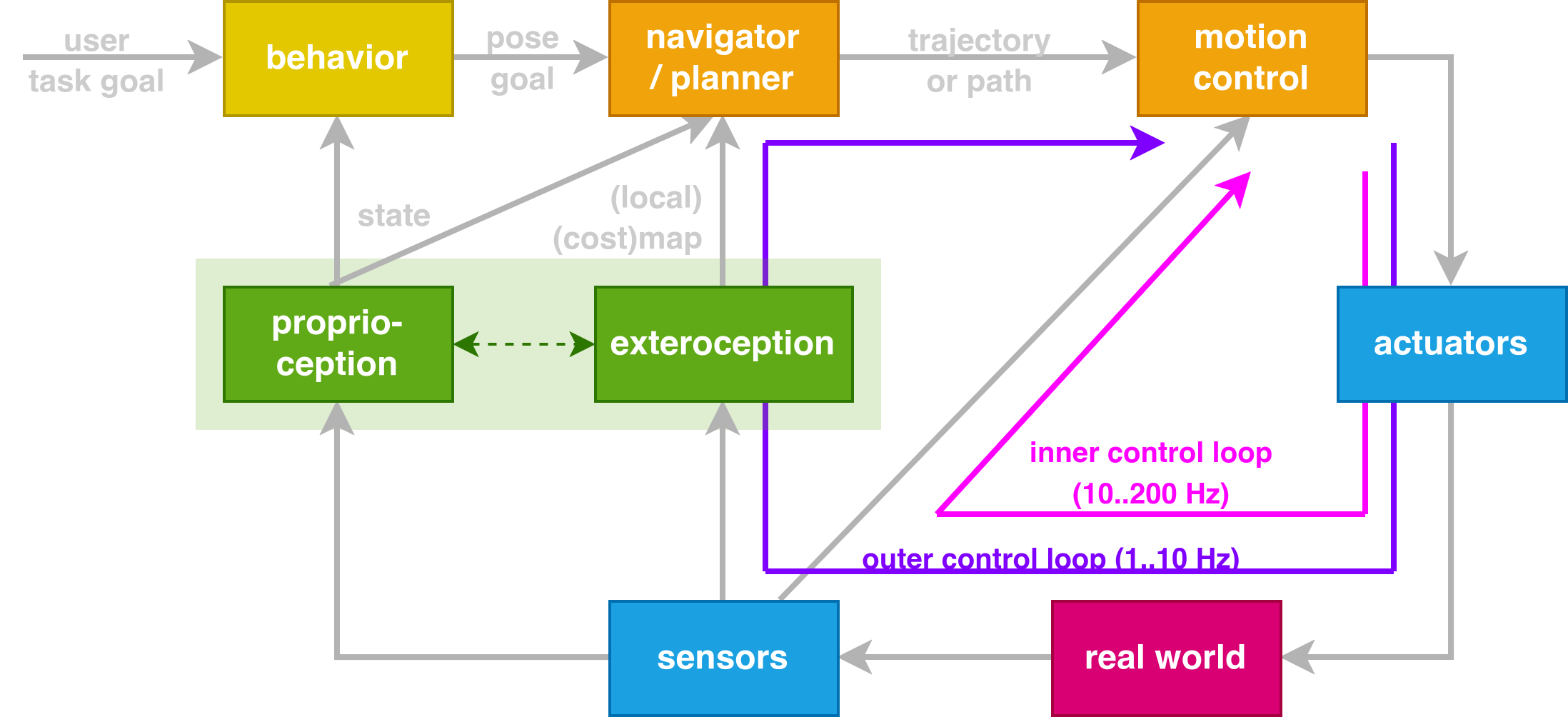

Before we discuss the open source software projects, let’s take 5 minutes to look at what this classic stack actually means. Though it has many variations, generally it boils down to the block diagram below.

Most high level, a behavior block takes in the user goal and decides on a high abstraction level what the robot ought to do. Things like, “open door” or “navigate to position X”. A second block, the navigator or planner, translates that rather abstract goal into something actionable from a control perspective, usually a trajectory or path. That trajectory or path gets passed to the motion control block, which then sends signals to the actuator to follow that plan.

There’s quite a bit of feedback occurring here. Most high frequency, about 10 to 200 Hz, is the inner feedback loop used by motion control, where the high frequency allows for smooth and precise motions. Wrapped around the inner loop, the outer control loop runs considerably slower due to its path going through compute-expensive blocks. Here we see the concept of cost maps (which describe the traversability cost as a function of position) popping up, as well as adaptive path replanning by the navigator. For example, if an obstacle pops up, the navigator will propose a new trajectory. The behavior block is usually also receptive to some input, creating a third feedback loop.

Perception, localization and mapping

First up is SLAM Toolbox - for simultaneous localization and mapping. It has the key ability to generate so-called floor plans, like the one below. To generate one, the robot drives through a building with its 2D lidar returning arrays of angles and distances. SLAM Toolbox subsequently optimizes the many scans into one consistent map. After the mapping phase, it localizes the robot in the generated map using that same 2D lidar sensor.
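To make the "arrays of angles and distances" concrete, here is a minimal sketch of how a single scan could be turned into occupied grid cells. It assumes the robot pose is already known; the function names, the 5 cm resolution and the 10 m range limit are illustrative assumptions, and the scan matching and map optimization that SLAM Toolbox performs are not shown.

```python
import math


def scan_to_points(angles, distances, pose=(0.0, 0.0, 0.0), max_range=10.0):
    """Convert one 2D lidar scan (polar, sensor frame) into (x, y) map points."""
    x0, y0, heading = pose
    points = []
    for angle, dist in zip(angles, distances):
        if not (0.0 < dist < max_range):  # skip invalid or out-of-range returns
            continue
        points.append((x0 + dist * math.cos(heading + angle),
                       y0 + dist * math.sin(heading + angle)))
    return points


def mark_occupied(points, resolution=0.05):
    """Return the set of grid cells (col, row) hit by at least one point."""
    return {(math.floor(x / resolution), math.floor(y / resolution))
            for x, y in points}
```

Repeating this for every scan along the drive, each with its own pose estimate, is what gradually fills in the floor plan.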

Though simple, a reliable localization system already enables many applications. For example, transporting parts in a factory between a warehouse and machines.

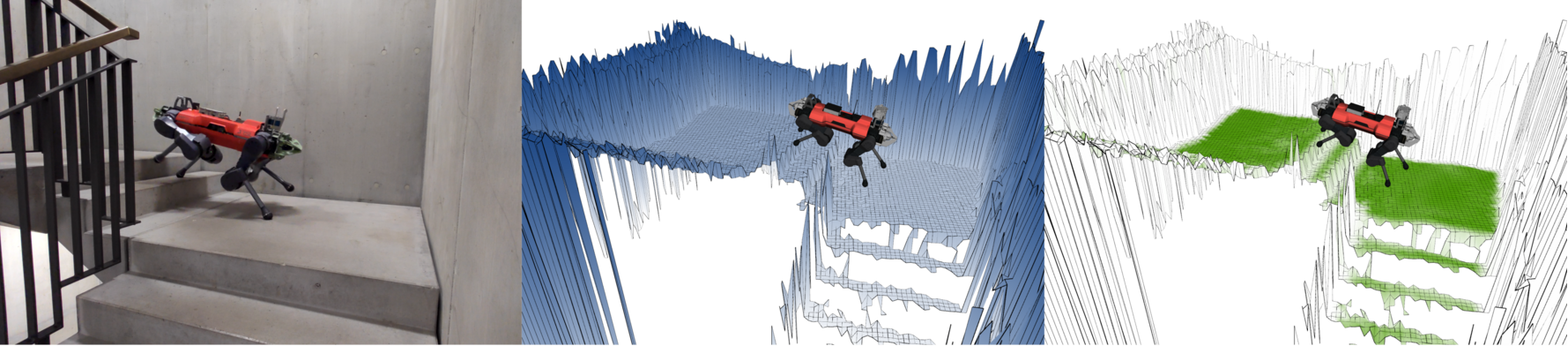

Elevation Mapping cupy constitutes a related project in this regard. Where SLAM Toolbox concerns itself with flat floors and 2D lidars, Elevation Mapping really shines in its handling of non-flat terrain, taking as input 3D lidar scans or depth cameras. Usually its generated height map is converted to a traversability map, after which it’s straightforward to use it in path planners. This makes it great for handling dynamic obstacle avoidance, as well as navigating complex 3D environments like staircases.
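As an illustration of the height-map-to-traversability step, the sketch below scores each cell by its steepest slope towards a neighbor. This is a simplified stand-in and not the method the project itself uses; the function name, cell size and 30 degree slope limit are assumptions.

```python
import math


def traversability(height_map, cell_size=0.1, max_slope_deg=30.0):
    """Map each cell to a cost in [0, 1]; 1.0 means too steep to traverse."""
    rows, cols = len(height_map), len(height_map[0])
    max_slope = math.tan(math.radians(max_slope_deg))
    cost = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            steepest = 0.0
            # Compare against the four direct neighbors that exist.
            for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    rise = abs(height_map[nr][nc] - height_map[r][c])
                    steepest = max(steepest, rise / cell_size)
            cost[r][c] = min(1.0, steepest / max_slope)
    return cost
```

The resulting grid has the same shape as a 2D cost map, which is why it plugs into a path planner so easily.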

Navigation, planning

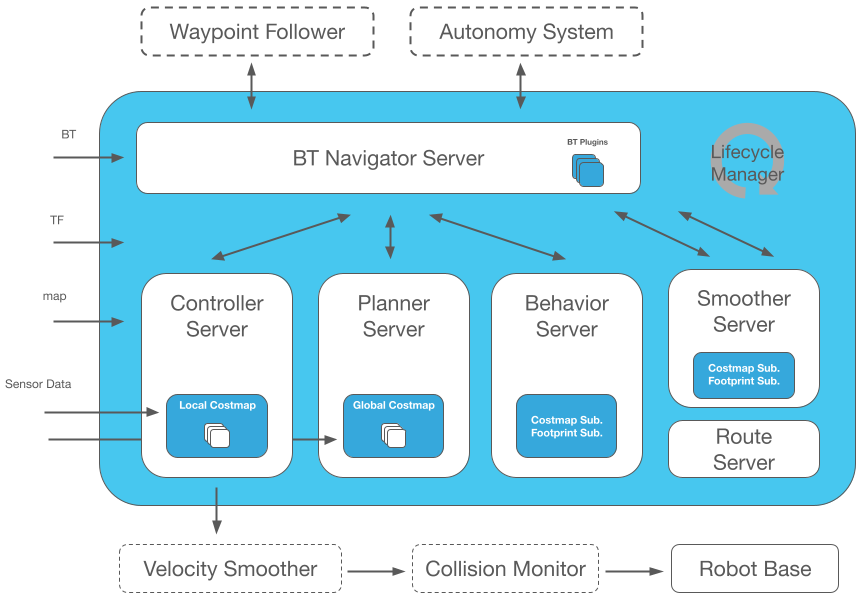

Talking about path planning, the reference project here is Nav2. As its architecture diagram below already suggests, Nav2 does a lot of things. (In fact, it incorporates the “classic stack” blocks of behavior, planner and motion control.) On top of that, its plugin system also makes it highly extensible.

Remember the floor plan mentioned in the previous section? We see it in the figure above visualized as black lines. (In this example, the robot’s driving around in a hexagonal room with nine pillars.) Next to that, note the cyan-purple coloring, representing the cost map. The cost map represents the cost of driving at a specific location. Here, it’s obstacle based: the further from an obstacle, the lower the cost. Using this cost map, the planner generates a path from one waypoint to another (minimizing the cost), and the controller subsequently executes that plan.
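The idea of planning over a cost map can be sketched with plain Dijkstra on a small grid. This is not Nav2 code; the `plan` function, the 4-connected grid and the convention that `None` marks an obstacle are assumptions made for the example.

```python
import heapq


def plan(cost_map, start, goal):
    """Dijkstra on a 4-connected grid; cells with cost None are obstacles."""
    rows, cols = len(cost_map), len(cost_map[0])
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        dist, cell = heapq.heappop(queue)
        if cell == goal:
            path = [cell]
            while cell in parent:  # walk back to the start
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if dist > best.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if cost_map[nr][nc] is None:
                continue
            # Each step costs 1 plus the cost of the cell being entered.
            new_dist = dist + 1.0 + cost_map[nr][nc]
            if new_dist < best.get((nr, nc), float("inf")):
                best[(nr, nc)] = new_dist
                parent[(nr, nc)] = cell
                heapq.heappush(queue, (new_dist, (nr, nc)))
    return None  # goal unreachable


# A pillar in the middle and a high-cost cell on the left side.
grid = [[0.0, 0.0, 0.0],
        [5.0, None, 0.0],
        [0.0, 0.0, 0.0]]
path = plan(grid, (0, 0), (2, 2))
```

In this example both routes around the pillar have the same length, yet the planner picks the one that avoids the expensive cell, which is exactly what the coloring in the figure encodes.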

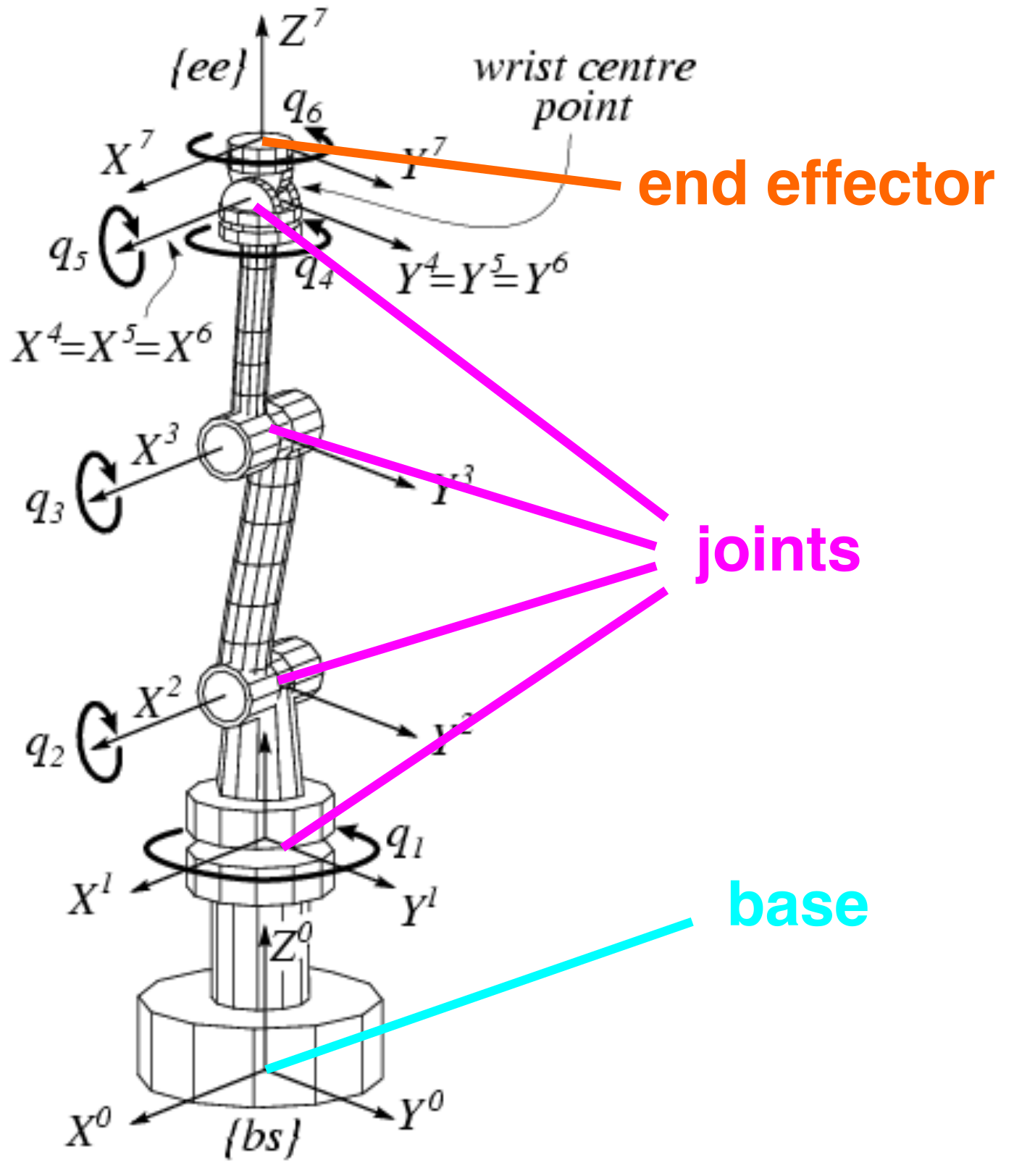

Like Nav2 is for wheeled and legged robots, MoveIt is for robot arms. Key differences are that here we’re working with 6D instead of 3D poses, and that we command several arm joints instead of only a twist reference. As a result, robot arm motion planning is surprisingly complex. The wide joint angle ranges make the exercise highly nonlinear; there’s also the matter of avoiding collisions, not just with the workpiece (expensive) but also with the arm itself (very expensive). As a result, the motion planning poses a highly non-convex optimization challenge. MoveIt provides several algorithms suited to solve common scenarios.

Though a bit difficult to set up, once done, MoveIt reduces robot arm control to commanding a desired end effector pose, and avoids just about any imminent self-destruction.

Motion control

We have a trajectory to follow. What now?

When it comes to linear feedback controller design, the Python Control Systems Library is the tool of choice for all those who like Bode and Nyquist plots. In the past, one required the Matlab “Control System Toolbox” for that; now the python-control package (installed with pip install control) allows you to do (almost) everything that Matlab does, while allowing the use of a modern programming language.

Where python-control is intended for design, ros2_control handles runtime execution of the created controllers in the form of a real-time capable motion control framework. It comes with several controllers, and is rather extensible with custom ones. Let’s say we require a PID velocity controller - ros2_control has it.
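For readers who want to see what such a PID velocity controller boils down to, here is a minimal sketch in plain Python. It is not the ros2_control implementation (whose controllers are C++ plugins); the class, the gains and the first-order motor model are assumptions chosen for illustration.

```python
class PID:
    """Discrete PID controller with output clamping and simple anti-windup."""

    def __init__(self, kp, ki, kd, output_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        if self.prev_error is None:
            derivative = 0.0  # no history yet on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        if abs(output) <= self.output_limit:
            self.integral = candidate  # only integrate while not saturated
            return output
        return max(-self.output_limit, min(self.output_limit, output))


def simulate(controller, setpoint=1.0, steps=2000, dt=0.005, tau=0.2):
    """Run the controller against a motor model dv/dt = (u - v) / tau."""
    velocity = 0.0
    for _ in range(steps):
        command = controller.update(setpoint, velocity, dt)
        velocity += dt * (command - velocity) / tau
    return velocity
```

Calling `simulate(PID(2.0, 5.0, 0.0, output_limit=10.0))` runs the loop at 200 Hz, within the inner-loop frequency range mentioned earlier, and the velocity settles at the setpoint.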

Behavior

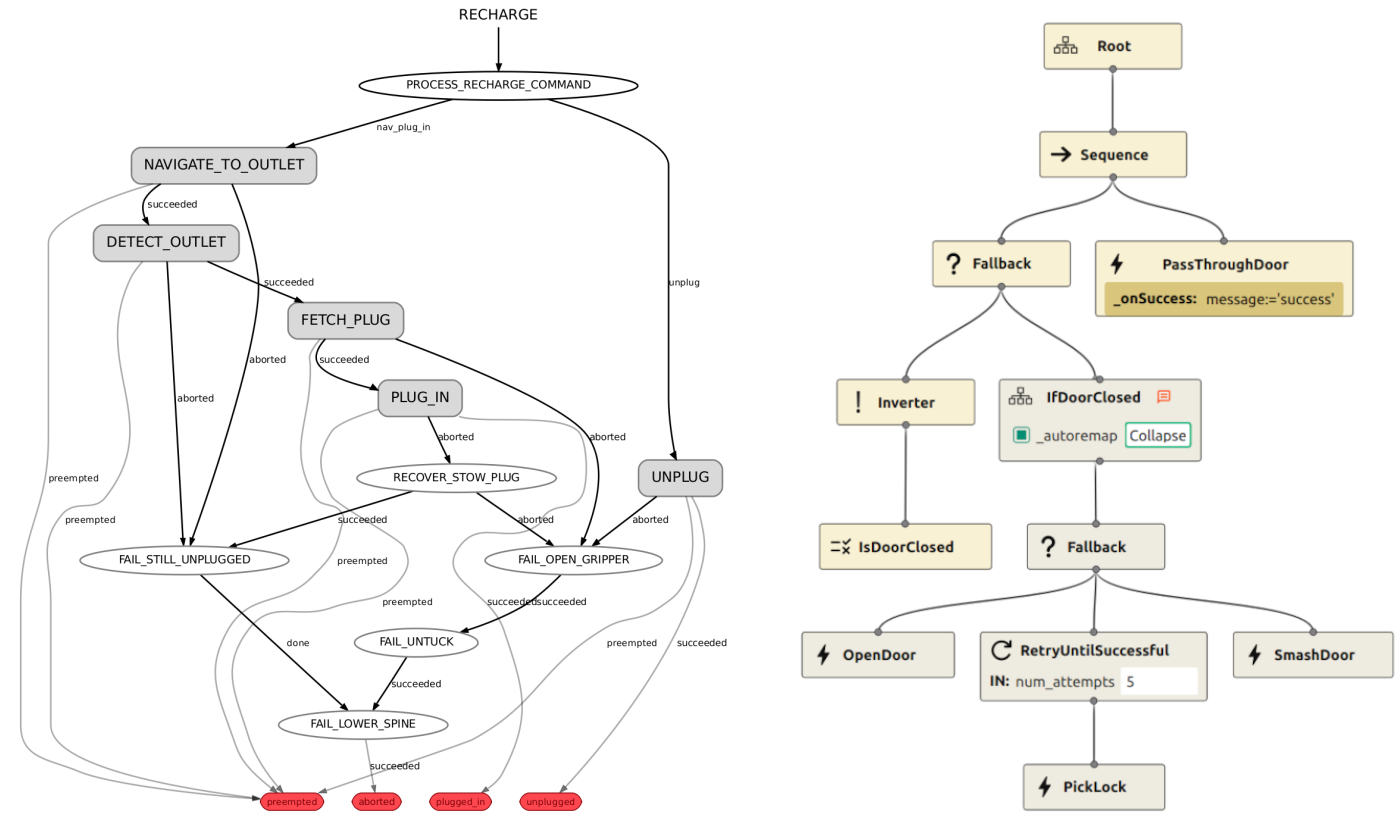

Final block to discuss is behavior. Two approaches are common here: state machines, and behavior trees. For both there exist a number of projects; we’ll discuss two here.

For state machines there’s SMACC, coming with all the bells and whistles: orthogonal state machines, hierarchical state machines, the possibility to combine these two, substates, event based … Based on the Boost Statechart Library, SMACC state machines compile to efficient machine code.

On the other hand, for behavior trees, we have BehaviorTree.CPP (BT.CPP), which got quite popular over the past few years. Its ability to be runtime composable (through XML files) is particularly nice. The figures below show a visual example of respectively a SMACC state machine and a BehaviorTree.CPP behavior tree.

If deciding between state machines and behavior trees, in general, behavior trees are considered easier to learn, and in any case, provide far fewer opportunities to shoot oneself in the foot. On the other hand, some applications with complex behavior benefit from the breadth of possibilities offered by state machines.
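To give a feel for why behavior trees are easy to pick up, the sketch below implements the two basic control nodes in a few lines of Python. It is a memory-less simplification and not the BehaviorTree.CPP API; the class names, the `record` helper and the door example are assumptions for illustration.

```python
SUCCESS, FAILURE, RUNNING = "SUCCESS", "FAILURE", "RUNNING"


class Action:
    """Leaf node wrapping a function that returns a status."""

    def __init__(self, fn):
        self.fn = fn

    def tick(self):
        return self.fn()


class Sequence:
    """Ticks children in order; stops at the first that does not succeed."""

    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status != SUCCESS:
                return status
        return SUCCESS


class Fallback:
    """Ticks children in order; stops at the first that does not fail."""

    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status != FAILURE:
                return status
        return FAILURE


log = []


def record(name, status):
    """Build a dummy action that logs its name and returns a fixed status."""
    def fn():
        log.append(name)
        return status
    return fn


# "Go through the door; open it first if it is not open yet."
tree = Sequence(
    Fallback(Action(record("door_is_open", FAILURE)),
             Action(record("open_door", SUCCESS))),
    Action(record("drive_through", SUCCESS)),
)
result = tree.tick()
```

The whole behavior is readable from the tree structure alone, and each node only knows about its own children, which limits the ways to get it wrong.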

All-in-one: ArduPilot/Dronecode

The sections so far discussed functional blocks, still to be combined. Some projects, on the other hand, instead encompass the entire “classic stack”. One of them is ArduPilot - the tool of choice when it’s about keeping things in the air.

In the ArduPilot project, we find all the discussed blocks - state estimator, low and high level controllers - as well as a simulation set-up and the Mission Planner, which offers a user interface for the pilot on the ground.

The ArduPilot project receives wide usage, especially where other (commercial) solutions fall short of fulfilling all requirements. For example, in Mission Spider Web Ukraine’s special forces relied on ArduPilot to eliminate more than a dozen bomber planes - worth billions - used by the invader. (screenshot in the figure below). For most of us, open source software is a way to express our freedom; for others, it’s a necessity to enforce their freedom.

Modern Stack

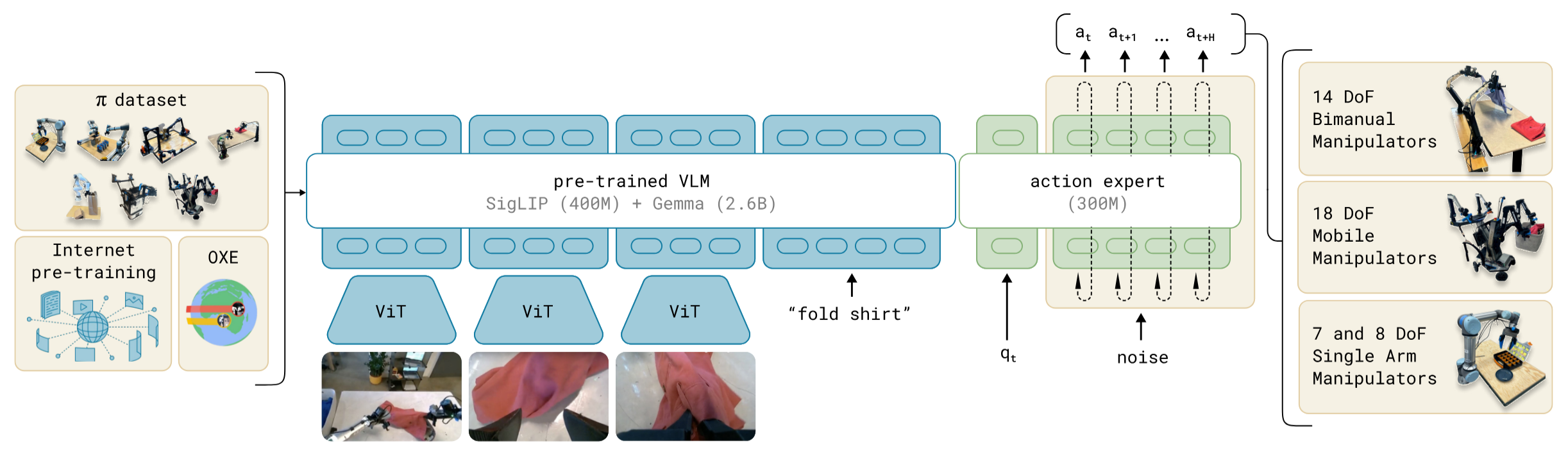

VLAs

First off - forget everything about the “classic stack” and its half a dozen blocks of the previous sections. All of it in the garbage can in favor of an end-to-end neural net. End-to-end in the sense that we feed in images (from cameras mounted on the robot) together with the desired activity (for example, “fold shirt”), and the joint angle setpoints roll out. After many attempts, these vision language action (VLA) models are finally delivering something close to usable behavior. The figure below shows the simplified architecture of one such setup.

Let’s briefly discuss how these work in more detail. The VLA consists of two parts - a vision language model (VLM) and an action expert. The VLM is a rather classic piece of kit, used also in several non-robotics applications. Simply speaking, it compresses the images and desired activity into an embedding that describes the current system state, as well as the desired future state. A second network, in green in the figure above, then generates the desired motor control based on that embedding.

Concerning network structure, the VLM is almost universally transformer based, while for the action expert, we see both transformer and diffusion models. In case of transformers, it’s much like an LLM, except its tokens aren’t parts of words but joint angles over time. And in case of a diffusion network - the same type as used in image generation - we don’t operate on pixels but instead joint angles over time.
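To make "tokens that are joint angles" more tangible, here is a minimal sketch assuming a uniform binning scheme, where each joint angle is mapped to one of 256 integer tokens. The bin count, the angle limits and the function names are illustrative assumptions and not taken from any particular VLA.

```python
import math


def tokenize(angles, low, high, bins=256):
    """Map each joint angle to an integer token by uniform binning."""
    tokens = []
    for angle in angles:
        clipped = min(max(angle, low), high)
        index = int((clipped - low) / (high - low) * bins)
        tokens.append(min(bins - 1, index))  # the upper limit falls in the last bin
    return tokens


def detokenize(tokens, low, high, bins=256):
    """Map tokens back to the center angle of their bin."""
    width = (high - low) / bins
    return [low + (token + 0.5) * width for token in tokens]


trajectory = [-1.0, 0.3, 2.0]  # one joint over three time steps, in radians
tokens = tokenize(trajectory, -math.pi, math.pi)
recovered = detokenize(tokens, -math.pi, math.pi)
```

The round trip loses at most half a bin width per angle, here about 0.012 rad, which is the price paid for letting a transformer predict motions the same way it predicts words.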

Important to know, is that these are pretty small models. Due to not requiring that much memory, they run fine on a recent desktop after adding a $300 GPU (such as the Nvidia RTX 3060 12GB).

The training of these networks is done in multiple steps. First, the VLM is trained to learn core capabilities, like detecting an object in the image and relating that to the desired action specified in natural language. No need to do that ourselves, plenty of pre-trained VLMs available. Second part is the training of the action head. Here, a mix of learning in simulation and by imitation is usually used.

After initial training, one usually needs to fine tune the robot on the particular task of interest. This is done by using a set of a leader and follower arm (both pictured below). The human teacher executes the task a few dozen times, each time with slight variations (for example, part in a different location), and does so by commanding the follower arm through the leader arm. Thereafter, the network is trained on the recorded data.

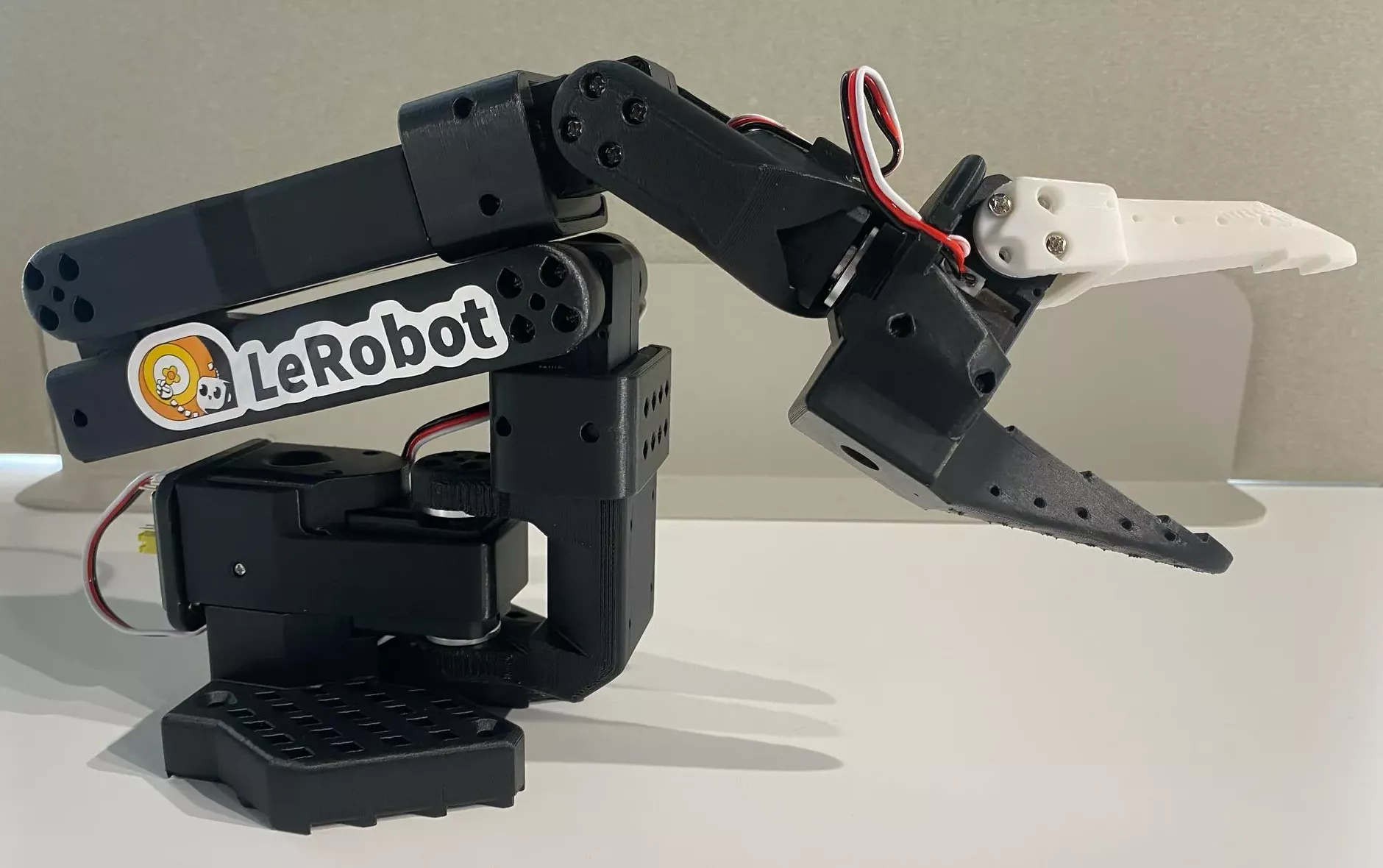

LeRobot

How about doing this ourselves, more specifically, doing things open source? The LeRobot project has been transformational in this regard by offering the base models and training infrastructure, as well as a large set of training data gathered by the community. The open source hardware design of the SO-101 arm (picture above), which relies on hobby servos and 3D-printed parts to bring down the cost (€114 per arm), also makes it attainable from a financial perspective. The video below shows an example of what performance one can expect.

Keep in mind here - the network here is end-to-end. There’s no planning block, no inverse kinematics block, no object detection block. It’s all ‘programmed’ in neurons.

Foundations

Communication, interfaces, storage

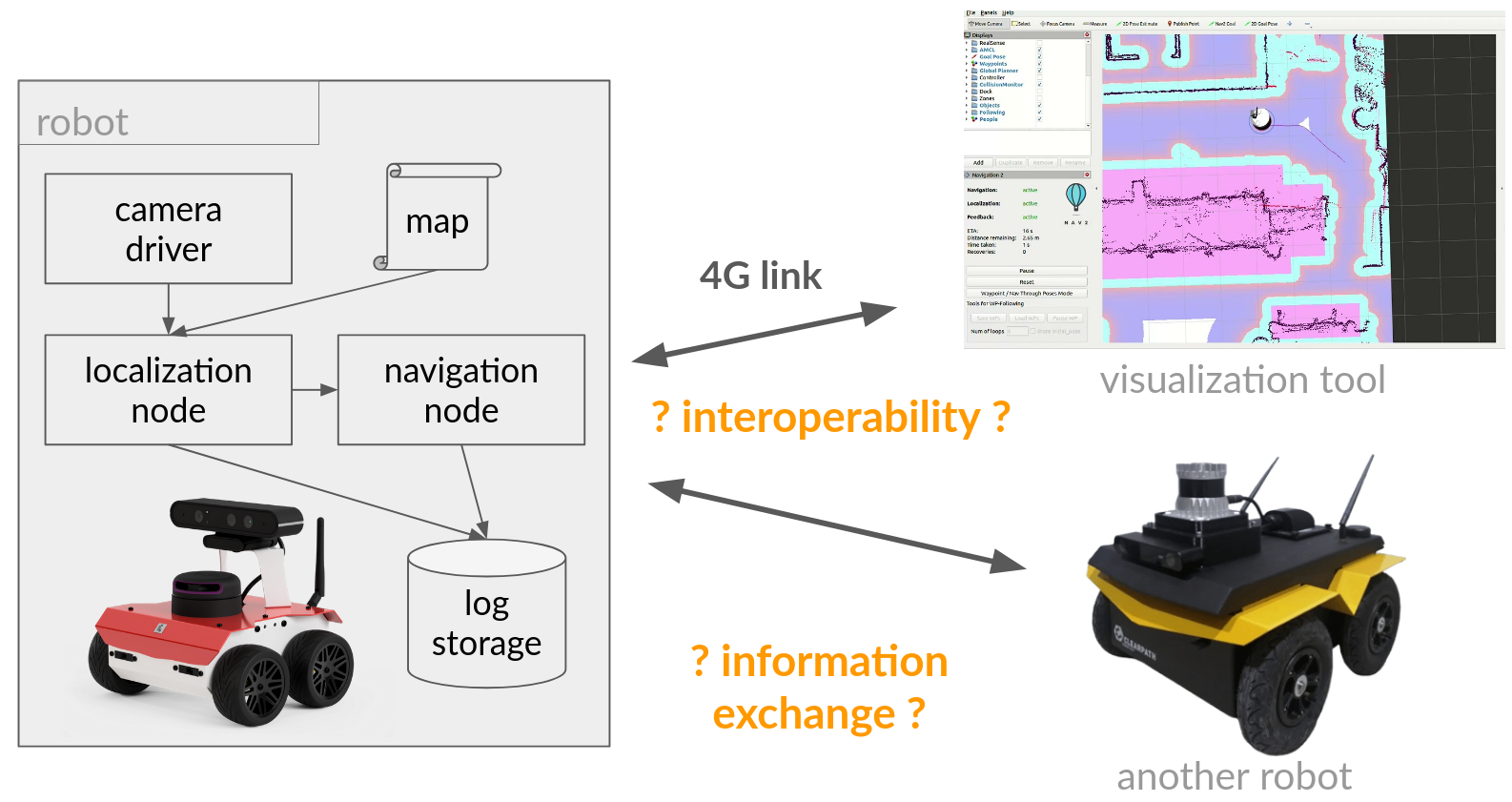

To introduce this part, let’s ask ourselves the questions Why do we need this? What challenges do we face? To do so, we’ll go through the robotics project in the figure below. On the left side, we have a single machine where we integrate several software pieces and want communication between them. There’s also the aspect of data recording: we want to save sensor data and replay it later for debugging purposes. Speaking of development, there’s a desire to visualize what the robot’s doing. This usually runs on a separate machine, connected over Wi-Fi or 4G. In some applications, we have several robots, and require robot-to-robot communication.

In all of these cases, two questions arise. The first is one of interoperability. How do we make sure that people building software end up building compatible software, without having to discuss every detail with everyone else (which doesn’t scale in a federated open source development model)? The second question concerns the information flow itself: how do we make sure that data moves to where we want it to?

ROS messages

Over the past two decades, the open source robotics community developed three answers for these questions. Let’s start with the interoperability question. ROS (Robot Operating System, more on that later) introduced standardized ROS messages. There are hundreds of these, covering all the usual robotics data types like poses, images, cost maps, joint states… and are also user-definable. Elementary here is the rosidl_generator, which provides the tool chain to compile well-readable .msg files into code usable in your user application, whether that be C++, Python or Rust. So when you say “my application publishes a PoseStamped”, I know exactly what you mean - and I can even build my code without ever having seen your application.

    # A Pose with reference coordinate frame and timestamp
    std_msgs/Header header
    geometry_msgs/Pose pose

Apart from user applications, message definitions are used by many tools. First is recording - these definitions allow efficient encoding to and decoding from binary without there being any chance of confusion. They’re also used extensively in visualization: many visualization tools (such as RViz, more on that later) provide visualizations dedicated to specific messages. Finally, communication middleware (which is software used to make different applications communicate with each other) may also use the generated code.

Good to know - ROS messages tend to be composed of several existing messages, promoting reuse and avoiding duplication. For example, the Header message consists of a timestamp and a coordinate frame id.
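The composition of messages, and why a fixed definition makes binary encoding unambiguous, can be sketched with Python dataclasses. These classes only mirror the field layout of the corresponding ROS messages; they are not the generated ROS code, and the byte layout used by `encode` and `decode` is a made-up one for illustration, not the serialization format that ROS middleware uses.

```python
import struct
from dataclasses import dataclass, field


@dataclass
class Time:
    sec: int = 0
    nanosec: int = 0


@dataclass
class Header:
    stamp: Time = field(default_factory=Time)
    frame_id: str = ""


@dataclass
class Point:
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


@dataclass
class Quaternion:
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    w: float = 1.0


@dataclass
class Pose:
    position: Point = field(default_factory=Point)
    orientation: Quaternion = field(default_factory=Quaternion)


@dataclass
class PoseStamped:
    header: Header = field(default_factory=Header)
    pose: Pose = field(default_factory=Pose)


# Illustrative layout: sec, nanosec, position xyz, orientation xyzw.
_FIXED = struct.Struct("<iI7d")


def encode(msg):
    """Pack a PoseStamped into bytes: fixed part, then length-prefixed frame id."""
    frame = msg.header.frame_id.encode("utf-8")
    p, q = msg.pose.position, msg.pose.orientation
    fixed = _FIXED.pack(msg.header.stamp.sec, msg.header.stamp.nanosec,
                        p.x, p.y, p.z, q.x, q.y, q.z, q.w)
    return fixed + struct.pack("<I", len(frame)) + frame


def decode(data):
    """Inverse of encode; both sides only need to share the definition."""
    sec, nanosec, px, py, pz, qx, qy, qz, qw = _FIXED.unpack_from(data)
    (length,) = struct.unpack_from("<I", data, _FIXED.size)
    start = _FIXED.size + 4
    frame_id = data[start:start + length].decode("utf-8")
    return PoseStamped(Header(Time(sec, nanosec), frame_id),
                       Pose(Point(px, py, pz), Quaternion(qx, qy, qz, qw)))
```

Because sender and receiver derive their code from the same definition, `decode(encode(msg))` gives back an equal message without either side knowing anything else about the other.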

ROS APIs

So we have messages. How does using them go about in practice? Actually, what do we need to set up a minimal robot application? This is where the ROS APIs truly shine. Observe the ROS example below: in just 22 lines of code, one sets up a ROS node that publishes the string “Hello world!” twice a second (receivable by other processes, on this as well as on other networked machines). Less obvious is all the code that didn’t have to be written, and everything that was abstracted away: the communication method used (inter-robot? inter- or intra-process? shared memory? TCP/UDP?), handling (re)connection, connecting to listening nodes, message serialization, I/O buffering, callback triggering…

    import rclpy
    from rclpy.node import Node
    from std_msgs.msg import String

    class MyPublisher(Node):
        def __init__(self):
            super().__init__('my_publisher')
            self.publisher_ = self.create_publisher(String, 'my_topic', 10)  # 10 = QoS queue depth
            timer_period = 0.5  # seconds, so two messages per second
            self.timer = self.create_timer(timer_period, self.timer_callback)

        def timer_callback(self):
            msg = String()
            msg.data = 'Hello world!'
            self.publisher_.publish(msg)

    if __name__ == '__main__':
        try:
            with rclpy.init():  # context manager form, available in recent rclpy releases
                rclpy.spin(MyPublisher())
        except KeyboardInterrupt:
            pass

Making this possible is the abstraction layer introduced by ROS, which ensures that - for the most part - the user application only has to concern itself with those parts that relate directly to its desired functionality. Apart from being a big time saver, this also helps tremendously in building compatible and maintainable software. If you publish messages through the ROS API, then I’ll be able to easily receive them, just by using the ROS API.

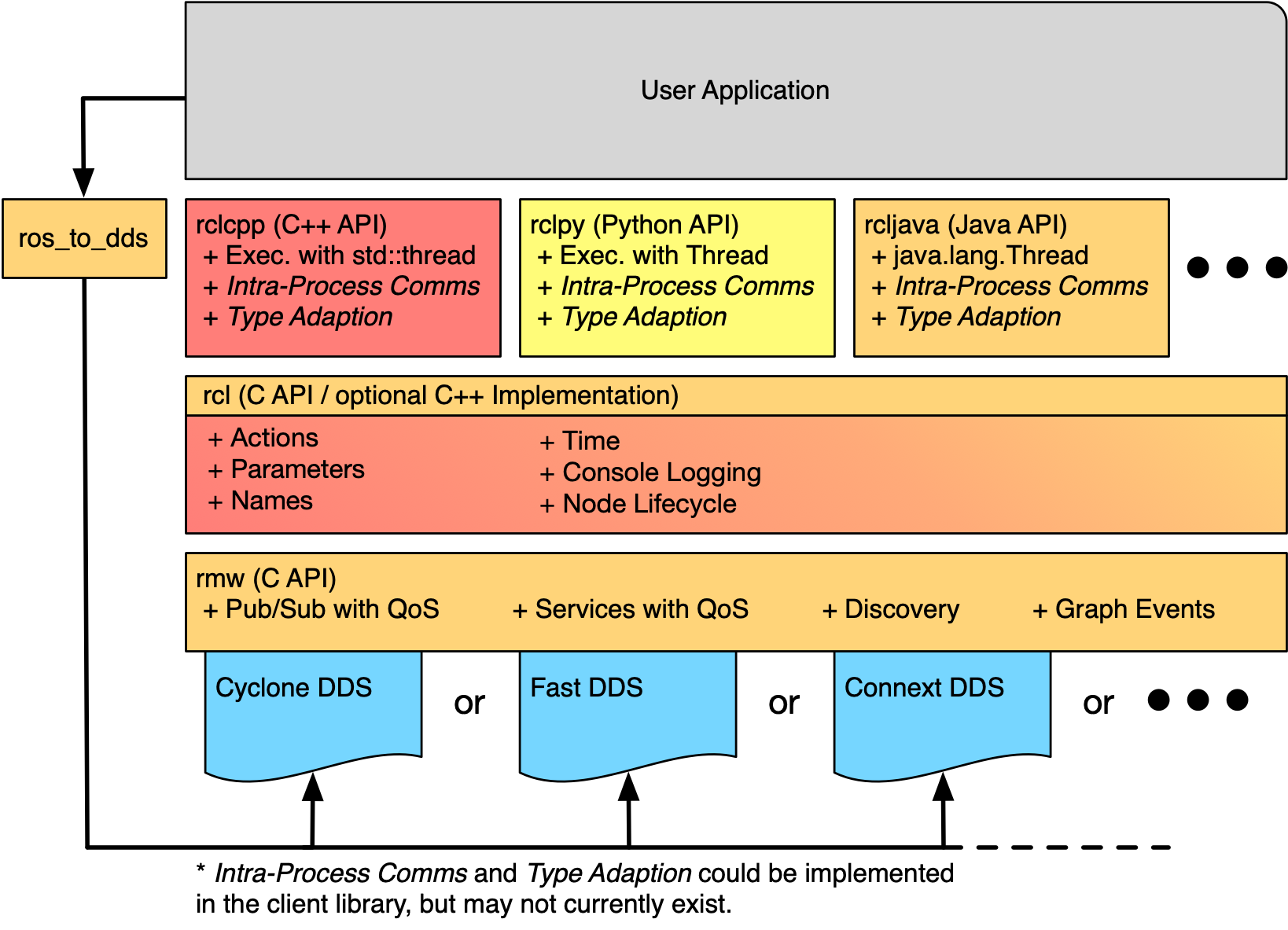

The third asset is flexibility. For example, in ROS 2 (unlike in ROS 1) the communication middleware isn’t fixed: one can choose the one that suits their needs best, such as one of the DDS implementations. Or go the way of the dragon: Zenoh. Let’s talk about that.

Performant and flexible communication - Zenoh

Passing data in robotics systems is surprisingly difficult. Two key factors here are the extreme variability and the large data rates. Robotics communication is just about “everything and the kitchen sink”: from small messages of less than a kB that must arrive with low latency at several hundred Hz, to multi-megabyte images; and from interprocess to multiple machines over perhaps transatlantic networks (including NATs, Wi-Fi and 4G links). The amount of data to move doesn’t help - especially when using cameras, seeing several Gbps passing by is common.
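A quick back-of-the-envelope calculation shows where those Gbps come from. The sketch below assumes uncompressed streams; the resolution, frame rate and message sizes are example values, not measurements.

```python
def stream_rate_gbps(bytes_per_message, messages_per_second):
    """Uncompressed data rate of a message stream in gigabits per second."""
    return bytes_per_message * messages_per_second * 8 / 1e9


# One Full HD RGB camera: 1920 x 1080 pixels, 3 bytes per pixel, 30 frames per second.
camera = stream_rate_gbps(1920 * 1080 * 3, 30)

# A 1 kB control message sent at 500 Hz, for comparison.
control = stream_rate_gbps(1000, 500)

print(f"camera:  {camera:.3f} Gbps")
print(f"control: {control:.3f} Gbps")
```

A single such camera already needs about 1.49 Gbps, more than a gigabit Ethernet link can carry, while the control stream needs only 0.004 Gbps but cares far more about latency. The same middleware has to serve both.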

Zenoh answers this call. It was designed from the ground up based on the experience gained from ROS 1’s middleware and several DDSs. The result: communication that “just works”. Although some configuration and setup remains required, it already automatically adapts to many of the common scenarios.

ROS

The name Robot Operating System (ROS) already passed by a few times. Being one of the three Open Robotics projects, it provides “a set of software libraries and tools for building robot applications”. Next to the already discussed message standards and communication APIs, ROS also provides key libraries such as tf, param and launch, extensive developer tooling (visualization, simulation, command line tools etc.) as well as binary distributions.

Being active in the space for almost two decades, ROS has already built an enduring legacy that, thanks to its open source nature, will continue well into the future.

Core algorithms

The above projects rely extensively on a set of algorithmic software libraries. A reference in image processing, OpenCV implements the core image operations as well as many related algorithms, such as those for camera projection, calibration and PnP solvers. If you’re working with point clouds instead, PCL (Point Cloud Library) is your friend, providing tooling around 3D geometry, surface reconstruction and outlier filtering, as well as much more. More interested in linear algebra? Sure, here the header-only library Eigen provides data structures for matrices as well as many operations on them (inverses, decompositions etc.). Of note is that one part of Eigen also focuses on geometry applications, with for example good support for homogeneous transformation matrices. (If you prefer Python, NumPy has similar goals and functionality.)

If you’re delving further into mathematics, you’ll probably also at some point encounter derivatives, for example when formulating a nonlinear optimization problem. Here, Ceres Solver has got you covered with its compile-time algorithmic differentiation (implemented using C++ templates), and as a plus, it comes with some solvers.
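The math behind this kind of algorithmic differentiation can be sketched with dual numbers. Ceres does this at compile time with C++ templates; the runtime Python below only illustrates the same idea, and the `Dual` class and the example residual are assumptions made for the sketch.

```python
import math


class Dual:
    """Number of the form value + deriv * eps, with eps * eps == 0."""

    def __init__(self, value, deriv=0.0):
        self.value, self.deriv = value, deriv

    @staticmethod
    def _lift(other):
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        other = Dual._lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual._lift(other)
        return Dual(self.value - other.value, self.deriv - other.deriv)

    def __rsub__(self, other):
        return Dual._lift(other) - self

    def __mul__(self, other):
        other = Dual._lift(other)
        # Product rule: (a * b)' = a * b' + a' * b
        return Dual(self.value * other.value,
                    self.value * other.deriv + self.deriv * other.value)

    __rmul__ = __mul__


def sin(x):
    """Sine with the chain rule applied to the derivative part."""
    return Dual(math.sin(x.value), math.cos(x.value) * x.deriv)


def derivative(f, x):
    """Evaluate df/dx at x by seeding the derivative part with 1."""
    return f(Dual(x, 1.0)).deriv


def residual(x):
    return 10.0 - x * x + sin(x)


slope = derivative(residual, 0.5)  # analytically: -2 * 0.5 + cos(0.5)
```

The user only writes `residual` as ordinary arithmetic; the derivative comes along for free and is exact up to floating point rounding, unlike finite differences.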

For planning and navigation, two projects are of particular interest, namely CasADi and FATROP. The former provides algorithmic manipulation and differentiation (quite nice is its Python interface) with an interface to the popular general purpose IPOPT solver. The latter, FATROP, is a constrained nonlinear optimizer focused on model predictive control (MPC) applications. For this application, it is currently the fastest FOSS solver available.

Development tooling

Robots do not build themselves (yet); and in this building process, one desires insight into what’s going on, in particular what’s not working as it should. Two vital tools here are visualization and simulation, the topics of the two following subsections.

Visualization

PlotJuggler, also known as the GOAT of time series visualization, is easy to use and visualizes exactly what you want it to. Also, where other tools focus on the visualizations, PlotJuggler’s flexibility lies in no small part in the many data formats it supports: live ROS streaming, ROS bags, MQTT, ZeroMQ, PX4, CSV… (It also supports data format plugins.) This makes PlotJuggler a tool of the trade for everyone involved with robotics.

Robotics data consists for a large part of spatial information, both in 2D (images) and 3D (point clouds, robot body, cost maps, …). RViz, with its 3D viewport, is usually the tool of choice to visualize these. With customized visualizations for many ROS messages, RViz provides excellent visualizations for many ROS projects with little configuration required.

RViz does not support opening bag files, nor time series visualizations. In practice, this is a non-issue because PlotJuggler can republish messages, achieving the result shown in the video below.

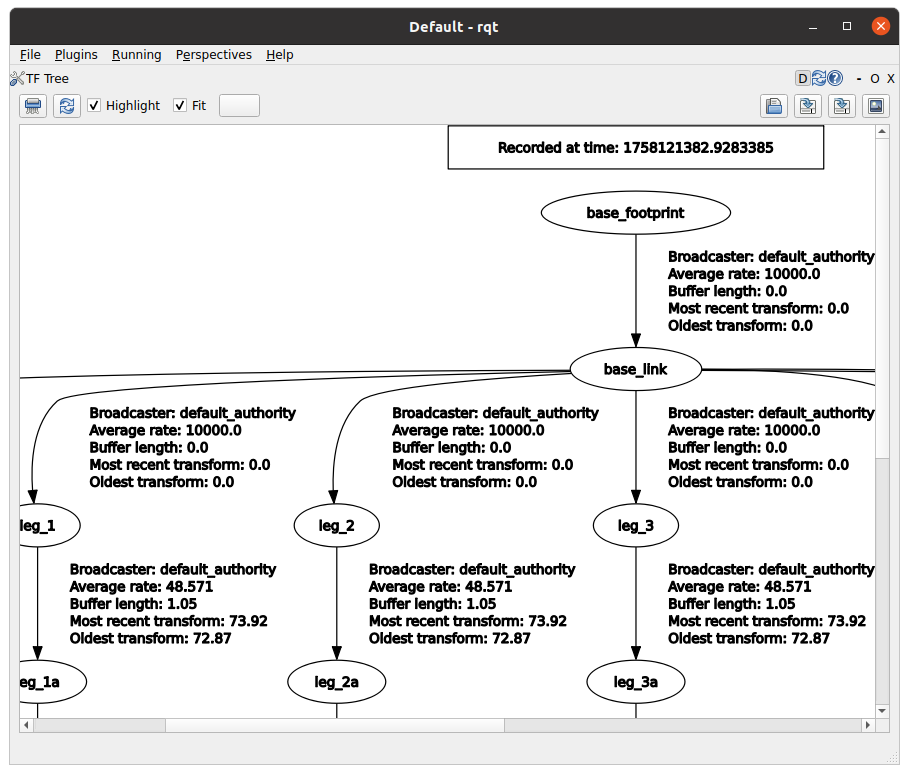

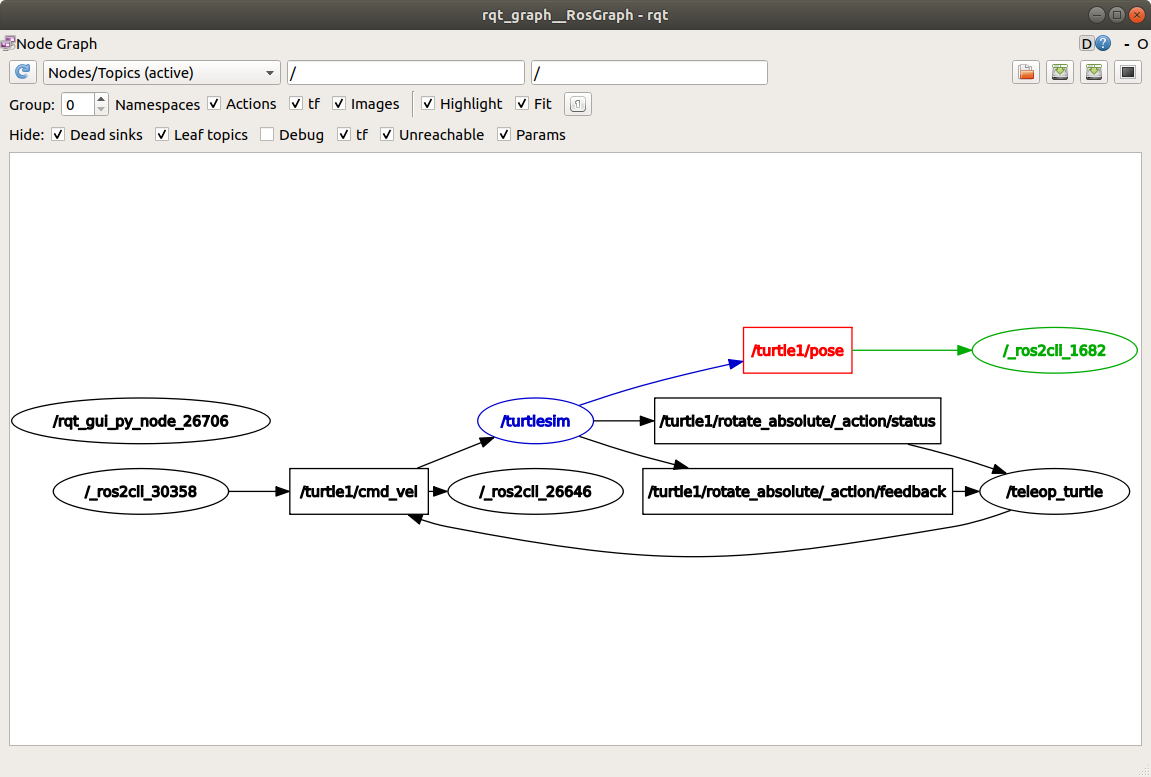

PlotJuggler and RViz visualizations are fairly straightforward, in the sense that images and time series already have a spatial meaning; next to that, particular value lies in visualizing the many parts of a robotics system that aren’t inherently visual. RQt, which combines many small ROS utilities, is particularly powerful in this regard. Two of its key abilities are visualization of the ROS node graph (showing how ROS nodes are linked together by their communication ports) and that of the TF graph (which shows where frames situate themselves in the transform tree).
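What the transform tree encodes can be sketched in a few lines: each frame stores its pose relative to its parent, and the pose in the root frame follows by chaining transforms upward. The sketch is restricted to 2D and uses made-up frame names and offsets; ROS's tf library handles full 3D, time-stamped transforms.

```python
import math


def compose(a, b):
    """Chain two 2D transforms (x, y, yaw): first a, then b expressed in a."""
    ax, ay, ayaw = a
    bx, by, byaw = b
    return (ax + math.cos(ayaw) * bx - math.sin(ayaw) * by,
            ay + math.sin(ayaw) * bx + math.cos(ayaw) * by,
            ayaw + byaw)


def pose_in_root(tree, frame):
    """Pose of `frame` in the root frame, found by walking up the tree."""
    pose = (0.0, 0.0, 0.0)
    while frame in tree:
        parent, transform = tree[frame]
        pose = compose(transform, pose)
        frame = parent
    return pose


# child frame -> (parent frame, pose of the child expressed in the parent)
tree = {
    "odom": ("map", (1.0, 0.0, math.pi / 2)),
    "base_link": ("odom", (2.0, 0.0, 0.0)),
    "lidar": ("base_link", (0.5, 0.0, 0.0)),
}
lidar_in_map = pose_in_root(tree, "lidar")
```

Here the lidar ends up at roughly x = 1.0, y = 2.5 in the map frame, rotated by 90 degrees. A graph view of the same tree makes a missing or misplaced link immediately visible.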

source of the node graph screenshot

Simulation

The breadth of simulator projects might come across as confusing at first; why would one need so much choice? The answer here lies in the many reasons behind simulation use; we’ll touch on five key ones here. A first is that the robot’s hardware is not (yet) available. This is not just a reality for hobbyists; it’s part of the parallel development workflow at many companies, where hardware and software are developed simultaneously to reduce time to market. The desire for a more controlled environment poses a second reason. Sensor data is inherently noisy, and so are actuator actuations. When something doesn’t work, the question that often arises is whether this is caused by the hardware, or by a bug in a particular piece of software. The possibility to run the robot with perfect sensing and actuation allows testing of only a particular part, easing the process of narrowing down the error.

Related to that, automated testing (tests run on PRs) is becoming a standard workhorse in many development processes. Though it won’t catch all issues (because simulation isn’t as good as reality), it’s pretty effective at keeping core functionality void of “small stupid mistakes”.

In parallel, a fourth use concerns synthetic data generation for AI training. Where classic approaches require annotation by humans, in a simulator the ground truth is inherently available. These simulators tend to focus on visual realism. Finally, some AI approaches, in particular reinforcement learning (RL), require live environment interaction. Simulation, though less realistic than the real world, is a boon here because of its ability to run far faster than real time, as well as in parallel without needing several physical robots. As a result, these simulators tend to be light on resource use.

Gazebo, an Open Robotics project, traces its origins back to the University of Southern California in 2002. Being developed for robotics from the start, it integrates easily and provides many sensor models. More recent, and coming from outside the robotics community, is Open 3D Engine (O3DE). Over Gazebo, it has the edge when it comes to large environments and photorealistic rendering.

Finally, the new kid on the block, Genesis, overturns many of the assumptions formerly held about FOSS simulators. Its universal physics engine is of particular interest in special use cases, for example underwater manipulation. Being general purpose and released less than a year ago, Genesis still has rough edges when it comes to using it in a robotics context. However, given the capabilities already present and that its development is still in full swing, there’s good reason to expect these will be ironed out soon.

definitely check out the full video

Reflection

Reflecting on the journey of robotics software, we notice on one hand the maturation of core technologies, where approaches developed over the past decades are joined together into unifying frameworks; and on the other, new, transformational technologies carrying great promise. Open source robotics is more than a few projects; it’s a thriving community supported by students, researchers, hobbyists as well as many companies. Infrastructure to turn dreams into physical reality, available to anyone.

There is little reason to think the coming years will see any less change. From trailblazing projects like LeRobot and the Genesis simulator, to more mundane yet no less influential projects like Nav2 and Zenoh, our collective quest for improvement endures. The universal accessibility motivates me - it ensures that the work will be to the benefit of everyone, that there can be no gatekeeping.

Perhaps most importantly, it proves, yet again, that the open source approach is the approach that lasts. Let’s continue this journey together, making the dream of advancing robotics a shared reality.

I hope you all enjoyed this (long) write-up; if any particular open source robotics project that made a difference for you personally came to mind, I’d appreciate if you could leave a brief note below.
